The global data center switch market is on the brink of a transformative surge, fueled by AI workloads, edge computing expansion, and hyperscale cloud architectures. With revenue projected to reach USD 20.93 billion by 2028, this article examines the forces driving that growth, including silicon photonics innovations, software-defined networking (SDN) advancements, and shifting enterprise IT strategies. Drawing on Gartner's 2023 Data Center Infrastructure Report and case studies from Microsoft Azure and Google Cloud, we'll show how network architects can future-proof their infrastructure to capitalize on the market's projected 12.7% CAGR.
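
The 2028 figure and the 12.7% CAGR together imply a starting market size. As a rough sketch, assuming annual compounding from a 2023 base year (the base year is an assumption, not stated above), the implied base is the 2028 value divided by (1 + 0.127) raised to the 5th power:

```python
def implied_base_value(final_value: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by a final value and a compound annual growth rate."""
    # Compounding model: final = base * (1 + cagr) ** years, so divide to recover the base.
    return final_value / (1 + cagr) ** years


def project(base_value: float, cagr: float, years: int) -> list[float]:
    """Return values for the base year and each of the following `years` years."""
    return [base_value * (1 + cagr) ** t for t in range(years + 1)]


if __name__ == "__main__":
    # Assumption: 2023 is the base year, so 2028 is 5 compounding periods away.
    base_2023 = implied_base_value(20.93, 0.127, 5)
    print(f"Implied 2023 market size: USD {base_2023:.2f} billion")
    for year, value in zip(range(2023, 2029), project(base_2023, 0.127, 5)):
        print(year, f"USD {value:.2f} billion")
```

Under that assumption the implied 2023 market is roughly USD 11.5 billion; a different base year would shift the figure.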

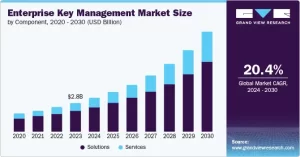

Chart showing historical growth trends, key market segments (enterprise vs. cloud providers), and 2028 revenue projections

Core Technical Breakdown:

AI-Optimized Switch Architectures

- Silicon Photonics Integration:
  - 1.6Tbps Transceivers: Enable 8x faster data transfer for AI model training clusters
  - Low-Loss Cables: Reduce signal degradation by 70% compared with traditional copper cabling
- Smart Switch Features:
  - AI-Driven Traffic Analysis: Automatically prioritize latency-sensitive workloads (e.g., real-time language models)
  - Adaptive Bandwidth Allocation: Dynamically adjust port speeds based on VM/container resource demands
- Energy-Efficient Designs:
  - Dynamic Power Scaling: Reduce idle port power consumption by 90% during off-peak hours
  - Liquid Cooling Solutions: Cut cooling costs by 40% for high-density switch racks
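
Adaptive bandwidth allocation can be illustrated with max-min fair sharing (water-filling), a classic policy in which no workload receives more than it asks for and any leftover capacity is split evenly among workloads that still want more. This is an illustrative assumption only: the article does not say which allocation policy any vendor's switch uses, and the workload names below are hypothetical.

```python
def max_min_fair_share(capacity_gbps: float, demands_gbps: dict[str, float]) -> dict[str, float]:
    """Allocate one link's capacity across workloads using max-min fairness."""
    allocation = {name: 0.0 for name in demands_gbps}
    unsatisfied = dict(demands_gbps)  # workloads not yet given an allocation
    remaining = capacity_gbps

    while unsatisfied and remaining > 0:
        fair_share = remaining / len(unsatisfied)
        # Workloads asking for no more than the current fair share get exactly their demand.
        small = {name: d for name, d in unsatisfied.items() if d <= fair_share}
        if not small:
            # Every remaining workload wants more than the fair share: split evenly and stop.
            for name in unsatisfied:
                allocation[name] = fair_share
            break
        for name, demand in small.items():
            allocation[name] = demand
            remaining -= demand
            del unsatisfied[name]
    return allocation


if __name__ == "__main__":
    # Hypothetical demands (Gbps) from three workloads sharing one 100Gbps port.
    demands = {"inference-vm": 10, "batch-container": 30, "training-job": 80}
    print(max_min_fair_share(100, demands))
```

Here the two smaller workloads receive their full 10 and 30 Gbps, and the training job gets the remaining 60 Gbps. A production scheduler would rerun this as demands change and would typically add weights or priorities for latency-sensitive traffic.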

Market Segmentation & Key Players

| Segment | 2023 Market Share | Leading Providers | Growth Drivers |
|---|---|---|---|
| Enterprise Data Centers | 42% | Cisco Catalyst, Arista Networks | Hybrid cloud adoption, remote workforces |
| Cloud Service Providers | 38% | NVIDIA Cumulus, Huawei Cloud | Serverless architectures, multi-cloud deployments |
| Edge Computing | 20% | Dell Technologies, HPE | IoT proliferation, 5G network slicing |

Use Case Examples:

- AI Training Hubs:
  - NVIDIA DGX Switches: Support 320TB/day data throughput for GPT-4 training
  - InfiniBand HDR: Provides 200Gbps inter-switch links for low-latency distributed tensor operations
- Cloud-Native Architectures:
  - VMware NSX Fabric Switches: Enable microsegmentation for 10,000+ virtual machines
  - AWS Nitro System: Provides hardware-enforced isolation for multi-tenant environments
- Edge Data Centers:
  - HPE Aruba 7500 Series: Handles 1M+ IoT devices with 10μs latency for industrial automation
  - Cisco Meraki MX Edge: Delivers 5G Standalone (SA) core network functions at branch offices
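
Daily-volume figures such as the 320TB/day cited for AI training are easier to compare with link speeds once converted to a sustained bit rate. A minimal sketch, assuming decimal units (1 TB = 10^12 bytes) and traffic spread evenly over 24 hours:

```python
SECONDS_PER_DAY = 24 * 60 * 60


def tb_per_day_to_gbps(tb_per_day: float) -> float:
    """Convert a daily volume in decimal terabytes to an average rate in gigabits per second."""
    bits_per_day = tb_per_day * 1e12 * 8  # terabytes -> bytes -> bits
    return bits_per_day / SECONDS_PER_DAY / 1e9  # bits/day -> bits/s -> Gbps


def link_utilization(avg_gbps: float, link_gbps: float) -> float:
    """Fraction of a single link's capacity consumed by an average rate."""
    return avg_gbps / link_gbps


if __name__ == "__main__":
    avg = tb_per_day_to_gbps(320)
    print(f"320 TB/day is about {avg:.2f} Gbps on average")
    print(f"Share of one 200Gbps link: {link_utilization(avg, 200):.1%}")
```

Under these assumptions, 320TB/day averages about 29.6 Gbps, or roughly 15% of a single 200Gbps link. Averages hide bursts, so peak rates during collective operations can be far higher than the daily mean.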

Performance Benchmarking:

- Throughput: 1.6Tbps (AI-optimized) vs. 100Gbps (legacy switches)

- Power Efficiency: 0.5W/Gbps (silicon photonics) vs. 2.2W/Gbps (copper-based)

- Latency: 8μs (NVLink-optimized) vs. 56μs (standard Ethernet)

- Scalability: 100,000+ ports (cloud-scale) vs. 2,000 ports (enterprise)
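
The power-efficiency and latency figures above can be turned into concrete comparisons. A short sketch using those listed values, assuming power scales linearly with bandwidth (a simplification, since real switches carry fixed overheads) and taking a 1.6Tbps port as the example bandwidth:

```python
# Figures taken from the benchmarking list above.
PHOTONICS_W_PER_GBPS = 0.5
COPPER_W_PER_GBPS = 2.2
NVLINK_LATENCY_US = 8.0
ETHERNET_LATENCY_US = 56.0


def port_power_watts(w_per_gbps: float, bandwidth_gbps: float) -> float:
    """Estimated power draw, assuming power grows linearly with bandwidth."""
    return w_per_gbps * bandwidth_gbps


def relative_saving(new: float, old: float) -> float:
    """Fractional reduction going from `old` to `new` (0.25 means 25% lower)."""
    return 1 - new / old


if __name__ == "__main__":
    bandwidth = 1600  # one 1.6Tbps port, in Gbps
    p_photonics = port_power_watts(PHOTONICS_W_PER_GBPS, bandwidth)
    p_copper = port_power_watts(COPPER_W_PER_GBPS, bandwidth)
    print(f"Silicon photonics: {p_photonics:.0f} W, copper: {p_copper:.0f} W")
    print(f"Power saving: {relative_saving(p_photonics, p_copper):.1%}")
    print(f"Latency ratio: {ETHERNET_LATENCY_US / NVLINK_LATENCY_US:.0f}x")
```

The relative saving (about 77%) does not depend on the bandwidth chosen; the absolute wattage does, and also depends on whether the W/Gbps figures cover the optics alone or the whole switch, which the list does not specify. The latency figures differ by a factor of 7.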

The data center switch market’s trajectory reflects the broader shift toward intelligent, hyper-efficient IT infrastructure. As AI and edge computing redefine workloads, enterprises must prioritize switches that offer nanosecond-level latency, terabit-scale bandwidth, and AI-powered automation. While traditional players like Cisco and Arista maintain strongholds in enterprise markets, emerging vendors leveraging silicon photonics and open-source SDN frameworks are challenging the status quo. For organizations aiming to stay ahead, strategic partnerships with switch manufacturers specializing in AI-optimized fabrics and zero-trust security models will be critical. The future of data centers lies not just in speed, but in intelligence—making every packet a catalyst for innovation.
