In today’s data-driven world, servers are the unsung heroes powering everything from enterprise applications to global cloud networks. While their complexity can seem overwhelming, the core components that define a server’s performance and reliability boil down to four critical elements: the CPU, memory, storage, and RAID (Redundant Array of Independent Disks) technology. Understanding how these components interact is essential for IT managers, system architects, and businesses aiming to optimize their infrastructure. This article breaks down each element’s role, highlights cutting-edge advancements, and reveals why balance is key to building a future-proof server ecosystem.

The Heartbeat of a Server: CPU and Memory

At the core of every server lies the CPU (Central Processing Unit), acting as its brain. Modern server CPUs, such as Intel’s Xeon or AMD’s EPYC processors, are engineered for parallel processing, with dozens of cores per socket running many threads at once. For instance, a cloud provider might deploy multi-core Xeon Scalable processors to manage millions of user requests across virtual machines. The CPU’s clock speed, core count, and cache size directly impact workload efficiency, whether it’s crunching financial data or running real-time analytics.

Equally vital is memory (RAM), which serves as the server’s short-term working space. While disks store data permanently, RAM holds the data and code of active applications and processes for near-instant access, and loses its contents when power is removed. High-density DDR4 or DDR5 modules let databases keep frequently used data in memory and answer queries in microseconds, reducing latency for high-frequency trading systems or e-commerce platforms. However, balancing CPU and memory resources is critical: overinvesting in cores without adequate RAM can lead to bottlenecks, while excessive memory with underpowered CPUs wastes resources.
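The balance between cores and RAM can be checked with simple arithmetic. The sketch below is illustrative only: the function names, the per-core memory requirement, and the "more than twice what is needed" cutoff are assumptions made for this example, not industry rules.

```python
def memory_per_core(total_ram_gib: float, cores: int) -> float:
    """Return GiB of RAM available per CPU core."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return total_ram_gib / cores


def balance_hint(total_ram_gib: float, cores: int, needed_gib_per_core: float) -> str:
    """Compare the RAM-per-core ratio with what the workload is assumed to need."""
    ratio = memory_per_core(total_ram_gib, cores)
    if ratio < needed_gib_per_core:
        return "memory-bound"   # cores are likely to wait on RAM
    if ratio > 2 * needed_gib_per_core:   # arbitrary cutoff chosen for illustration
        return "memory-heavy"   # RAM is likely to sit idle
    return "balanced"


# Hypothetical configurations, each needing an assumed 4 GiB per core.
for ram, cores in [(128, 64), (256, 64), (1024, 64)]:
    print(f"{ram} GiB / {cores} cores -> {balance_hint(ram, cores, 4)}")
```

In practice the required memory per core comes from profiling the actual workload; the value of 4 GiB here is only a placeholder.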

The Storage Revolution: From HDDs to NVMe SSDs

Storage defines a server’s long-term data retention and retrieval capabilities. Traditional Hard Disk Drives (HDDs) rely on spinning platters and magnetic surfaces, offering cost-effective bulk storage for archival data. Solid-State Drives (SSDs) have no moving parts, and NVMe (Non-Volatile Memory Express) models, which attach over PCIe rather than the older SATA interface, have redefined expectations for speed. A media streaming service might use NVMe SSDs to cache popular content, keeping playback start times low for millions of users.

The choice between HDDs and SSDs hinges on use cases:

- HDDs excel in cost-sensitive, high-capacity scenarios (e.g., backup storage).

- SSDs dominate latency-sensitive applications like AI training or transactional databases.

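Other technologies have targeted a different gap. 3D XPoint (used in Intel Optane) sat between DRAM and NAND flash, offering lower latency and higher write endurance than conventional SSDs while remaining non-volatile. Intel announced in 2022 that it was winding down its Optane business, so the technology is better viewed as a reference point for future memory tiers than as an emerging option.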

RAID Technology: Balancing Performance and Redundancy

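No discussion of server storage is complete without RAID, which can be implemented by a dedicated controller card or in software, and which orchestrates disk arrays to enhance performance and fault tolerance. RAID configurations, such as RAID 0 (striping for speed, with no redundancy), RAID 1 (mirroring for redundancy), or RAID 5/6 (striping with single or double parity), determine how data is distributed across drives. For example, a healthcare provider might deploy RAID 6 on its diagnostic imaging servers so that the array survives two simultaneous disk failures, one part of the availability safeguards expected of systems subject to HIPAA regulations. RAID protects against drive failure only; it is not a substitute for backups.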
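The trade-off between capacity and redundancy at each RAID level can be worked out directly. The following is a minimal sketch under simplifying assumptions (identical drives, no hot spares, no controller overhead); the function names `raid_summary` and `xor_parity` are made up for this example. It also shows the XOR idea behind single-parity recovery, which is a simplified model of RAID 5 rather than any vendor’s implementation.

```python
from typing import Dict, Sequence


def raid_summary(level: int, drives: int, drive_tb: float) -> Dict[str, float]:
    """Usable capacity and tolerated drive failures for common RAID levels."""
    min_drives = {0: 2, 1: 2, 5: 3, 6: 4}
    if level not in min_drives:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < min_drives[level]:
        raise ValueError(f"RAID {level} needs at least {min_drives[level]} drives")
    if level == 0:      # striping only: all capacity, no redundancy
        usable, tolerated = drives * drive_tb, 0
    elif level == 1:    # every drive holds a full copy
        usable, tolerated = drive_tb, drives - 1
    elif level == 5:    # one drive's worth of parity
        usable, tolerated = (drives - 1) * drive_tb, 1
    else:               # RAID 6: two drives' worth of parity
        usable, tolerated = (drives - 2) * drive_tb, 2
    return {"usable_tb": usable, "tolerated_failures": tolerated}


def xor_parity(blocks: Sequence[bytes]) -> bytes:
    """XOR equal-length blocks together, byte by byte."""
    parity = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            parity[i] ^= byte
    return bytes(parity)


# Eight hypothetical 4 TB drives in RAID 6.
print(raid_summary(6, 8, 4.0))

# Single-parity recovery: XOR of the surviving blocks and the parity
# block reproduces the block that was lost.
data = [b"ABCD", b"EFGH", b"IJKL"]
parity = xor_parity(data)
recovered = xor_parity([data[0], data[2], parity])
print(recovered == data[1])
```

With eight 4 TB drives, RAID 6 gives up two drives’ worth of capacity in exchange for surviving any two failures, which is the trade the healthcare example above is making.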

Many modern RAID controllers and their management tools monitor drive health indicators such as S.M.A.R.T. attributes, and some vendors layer predictive analytics on top, alerting administrators to likely drive failures before they occur. This proactive approach minimizes downtime for mission-critical systems, from financial trading platforms to industrial IoT networks.
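A very simple form of failure prediction is to watch a drive health counter for growth over time. The sketch below is a plain threshold heuristic, not the analytics any particular controller uses. It assumes that periodic readings of each drive’s reallocated-sector count (a standard S.M.A.R.T. attribute) have already been collected; the drive names and readings are invented sample data, and `drives_at_risk` is a hypothetical helper.

```python
from typing import Dict, List


def drives_at_risk(history: Dict[str, List[int]], max_growth: int = 0) -> List[str]:
    """Flag drives whose counter grew by more than max_growth between
    the first and the last reading."""
    flagged = []
    for drive, counts in history.items():
        if len(counts) >= 2 and counts[-1] - counts[0] > max_growth:
            flagged.append(drive)
    return sorted(flagged)


# Invented readings of the reallocated-sector count, oldest first.
readings = {
    "sda": [0, 0, 0],
    "sdb": [2, 5, 11],   # steadily growing: worth replacing proactively
    "sdc": [4, 4, 4],    # non-zero but stable
}
print(drives_at_risk(readings))
```

Production tools combine many more signals, but the principle is the same: act on a trend before the drive drops out of the array.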

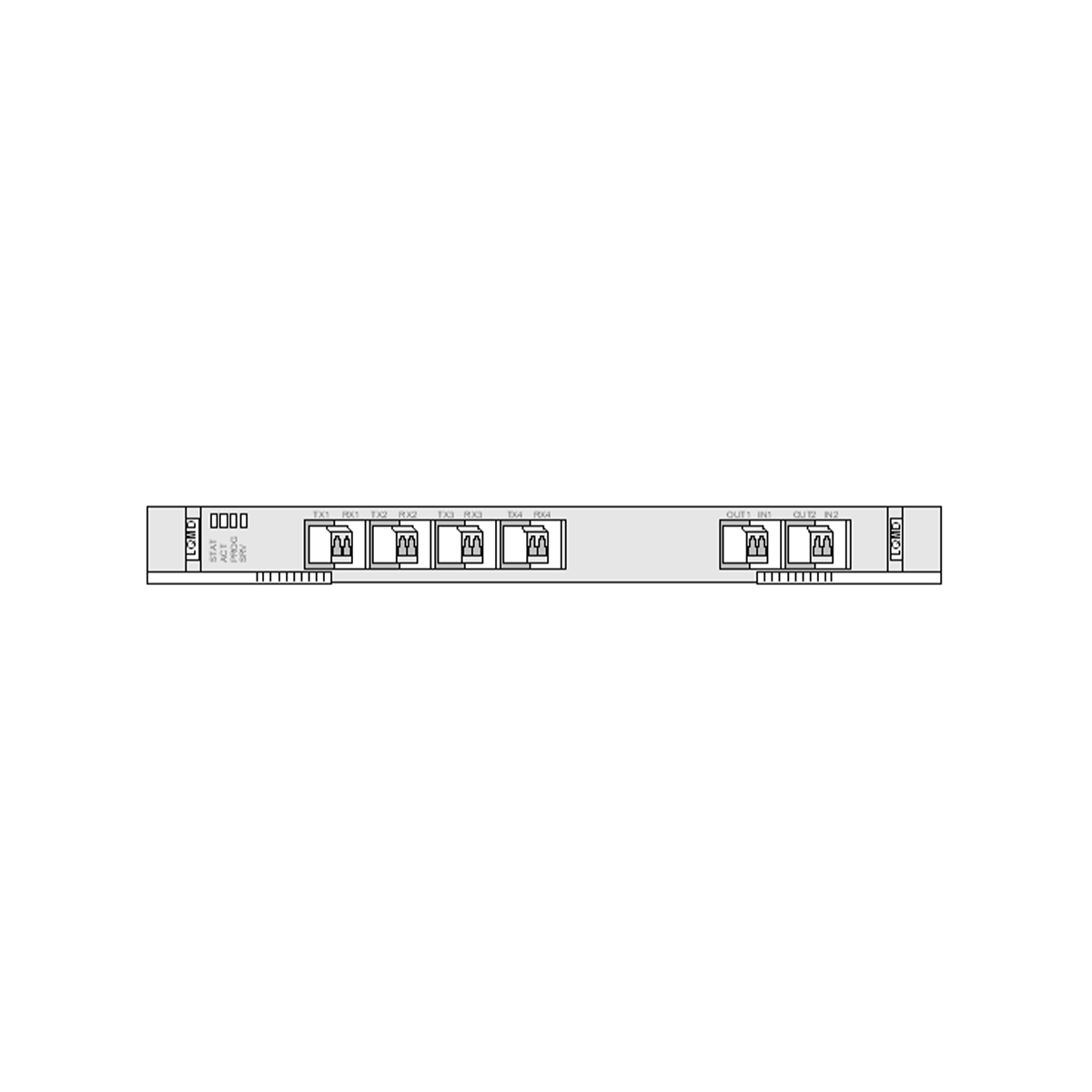

Image description: A detailed diagram illustrating the flow of data between a server’s CPU, RAM, NVMe SSDs, and RAID controller, highlighting how components collaborate during read/write operations.

Overcoming Common Challenges

One persistent challenge is thermal management. High-performance CPUs and dense SSDs generate significant heat, which can trigger thermal throttling or shorten hardware life. Optimized airflow designs are standard in hyperscale data centers, and liquid cooling is increasingly common for the densest racks. Another hurdle is cost optimization: for startups, investing in enterprise-grade NVMe drives for all workloads may be prohibitive. Tiered storage strategies, where frequently accessed data resides on SSDs and archives on HDDs, offer a balanced compromise.
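A tiered storage policy can be sketched as a greedy placement: the most frequently read datasets go to SSD until its capacity runs out, and everything else goes to HDD. This is one possible policy under stated assumptions, not a standard algorithm; the `Dataset` type, the `hot_threshold` value, and the sample datasets are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Dataset:
    name: str
    size_gb: float
    reads_per_day: int


def assign_tiers(datasets: List[Dataset], ssd_capacity_gb: float,
                 hot_threshold: int = 100) -> Dict[str, str]:
    """Place the hottest datasets on SSD while space remains; the rest go to HDD."""
    placement = {}
    remaining = ssd_capacity_gb
    # Consider the most frequently read datasets first.
    for ds in sorted(datasets, key=lambda d: d.reads_per_day, reverse=True):
        if ds.reads_per_day >= hot_threshold and ds.size_gb <= remaining:
            placement[ds.name] = "ssd"
            remaining -= ds.size_gb
        else:
            placement[ds.name] = "hdd"
    return placement


# Invented workload with a 500 GB SSD budget.
workload = [
    Dataset("orders_db", 200, 50_000),
    Dataset("session_cache", 50, 20_000),
    Dataset("video_masters", 400, 150),
    Dataset("logs_2021", 900, 3),
]
print(assign_tiers(workload, ssd_capacity_gb=500))
```

Here `video_masters` is read often enough to count as hot but no longer fits in the SSD space left after the two busier datasets, so it falls back to HDD. A real policy would also weigh write patterns and re-evaluate placement over time.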

The Future: Convergence and Customization

The line between server components continues to blur. Servers built on CPUs such as AMD’s EPYC are commonly paired with RDMA-capable network adapters; RDMA (Remote Direct Memory Access) lets one machine read or write another’s memory without involving the remote CPU, reducing latency in hyper-converged infrastructures. Meanwhile, NVMe-oF (NVMe over Fabrics) extends the NVMe protocol across a network, so remote SSDs can be accessed with latency close to that of local drives. For businesses, this means greater flexibility: customizable server builds let IT teams tailor components to specific workloads, whether deploying edge computing nodes or AI training clusters.

A server’s power doesn’t come from individual components alone—it’s the synergy between CPU, memory, storage, and RAID technology that delivers unmatched performance. As industries demand faster, more reliable infrastructure, understanding these elements empowers organizations to make informed decisions. Whether upgrading legacy systems or building cloud-native architectures, aligning hardware choices with strategic goals ensures scalability, security, and longevity. In the ever-evolving tech landscape, knowledge isn’t just power—it’s the foundation of innovation.
